备注

Go to the end 下载完整的示例代码。或者通过浏览器中的MysterLite或Binder运行此示例

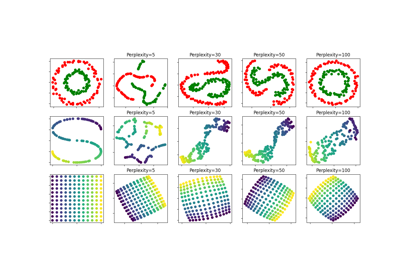

TSNE的大约最近邻居#

此示例展示了如何在管道中链接KNeighborsTransformer和TSNE。它还展示了如何包装包裹 nmslib 和 pynndescent 替换KNeighborsTransformer并执行近似最近邻。这些软件包可以通过 pip install nmslib pynndescent .

注意:在KNeighborsTransformer中,我们使用的定义包括每个训练点作为其自己的邻居,计数 n_neighbors ,出于兼容性原因,在以下情况下计算一个额外的邻居 mode == 'distance' .请注意,我们在拟议的 nmslib wrapper.

# Authors: The scikit-learn developers

# SPDX-License-Identifier: BSD-3-Clause

首先,我们尝试导入包并警告用户以防丢失。

import sys

try:

import nmslib

except ImportError:

print("The package 'nmslib' is required to run this example.")

sys.exit()

try:

from pynndescent import PyNNDescentTransformer

except ImportError:

print("The package 'pynndescent' is required to run this example.")

sys.exit()

我们定义了一个包装类,用于将scikit-learn API实现到 nmslib ,以及加载功能。

import joblib

import numpy as np

from scipy.sparse import csr_matrix

from sklearn.base import BaseEstimator, TransformerMixin

from sklearn.datasets import fetch_openml

from sklearn.utils import shuffle

class NMSlibTransformer(TransformerMixin, BaseEstimator):

"""Wrapper for using nmslib as sklearn's KNeighborsTransformer"""

def __init__(self, n_neighbors=5, metric="euclidean", method="sw-graph", n_jobs=-1):

self.n_neighbors = n_neighbors

self.method = method

self.metric = metric

self.n_jobs = n_jobs

def fit(self, X):

self.n_samples_fit_ = X.shape[0]

# see more metric in the manual

# https://github.com/nmslib/nmslib/tree/master/manual

space = {

"euclidean": "l2",

"cosine": "cosinesimil",

"l1": "l1",

"l2": "l2",

}[self.metric]

self.nmslib_ = nmslib.init(method=self.method, space=space)

self.nmslib_.addDataPointBatch(X.copy())

self.nmslib_.createIndex()

return self

def transform(self, X):

n_samples_transform = X.shape[0]

# For compatibility reasons, as each sample is considered as its own

# neighbor, one extra neighbor will be computed.

n_neighbors = self.n_neighbors + 1

if self.n_jobs < 0:

# Same handling as done in joblib for negative values of n_jobs:

# in particular, `n_jobs == -1` means "as many threads as CPUs".

num_threads = joblib.cpu_count() + self.n_jobs + 1

else:

num_threads = self.n_jobs

results = self.nmslib_.knnQueryBatch(

X.copy(), k=n_neighbors, num_threads=num_threads

)

indices, distances = zip(*results)

indices, distances = np.vstack(indices), np.vstack(distances)

indptr = np.arange(0, n_samples_transform * n_neighbors + 1, n_neighbors)

kneighbors_graph = csr_matrix(

(distances.ravel(), indices.ravel(), indptr),

shape=(n_samples_transform, self.n_samples_fit_),

)

return kneighbors_graph

def load_mnist(n_samples):

"""Load MNIST, shuffle the data, and return only n_samples."""

mnist = fetch_openml("mnist_784", as_frame=False)

X, y = shuffle(mnist.data, mnist.target, random_state=2)

return X[:n_samples] / 255, y[:n_samples]

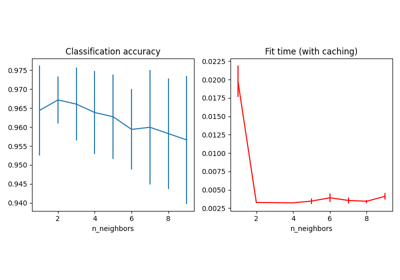

我们对不同的精确/大约最近邻变压器进行基准测试。

import time

from sklearn.manifold import TSNE

from sklearn.neighbors import KNeighborsTransformer

from sklearn.pipeline import make_pipeline

datasets = [

("MNIST_10000", load_mnist(n_samples=10_000)),

("MNIST_20000", load_mnist(n_samples=20_000)),

]

n_iter = 500

perplexity = 30

metric = "euclidean"

# TSNE requires a certain number of neighbors which depends on the

# perplexity parameter.

# Add one since we include each sample as its own neighbor.

n_neighbors = int(3.0 * perplexity + 1) + 1

tsne_params = dict(

init="random", # pca not supported for sparse matrices

perplexity=perplexity,

method="barnes_hut",

random_state=42,

n_iter=n_iter,

learning_rate="auto",

)

transformers = [

(

"KNeighborsTransformer",

KNeighborsTransformer(n_neighbors=n_neighbors, mode="distance", metric=metric),

),

(

"NMSlibTransformer",

NMSlibTransformer(n_neighbors=n_neighbors, metric=metric),

),

(

"PyNNDescentTransformer",

PyNNDescentTransformer(

n_neighbors=n_neighbors, metric=metric, parallel_batch_queries=True

),

),

]

for dataset_name, (X, y) in datasets:

msg = f"Benchmarking on {dataset_name}:"

print(f"\n{msg}\n" + str("-" * len(msg)))

for transformer_name, transformer in transformers:

longest = np.max([len(name) for name, model in transformers])

start = time.time()

transformer.fit(X)

fit_duration = time.time() - start

print(f"{transformer_name:<{longest}} {fit_duration:.3f} sec (fit)")

start = time.time()

Xt = transformer.transform(X)

transform_duration = time.time() - start

print(f"{transformer_name:<{longest}} {transform_duration:.3f} sec (transform)")

if transformer_name == "PyNNDescentTransformer":

start = time.time()

Xt = transformer.transform(X)

transform_duration = time.time() - start

print(

f"{transformer_name:<{longest}} {transform_duration:.3f} sec"

" (transform)"

)

样本输出::

Benchmarking on MNIST_10000:

----------------------------

KNeighborsTransformer 0.007 sec (fit)

KNeighborsTransformer 1.139 sec (transform)

NMSlibTransformer 0.208 sec (fit)

NMSlibTransformer 0.315 sec (transform)

PyNNDescentTransformer 4.823 sec (fit)

PyNNDescentTransformer 4.884 sec (transform)

PyNNDescentTransformer 0.744 sec (transform)

Benchmarking on MNIST_20000:

----------------------------

KNeighborsTransformer 0.011 sec (fit)

KNeighborsTransformer 5.769 sec (transform)

NMSlibTransformer 0.733 sec (fit)

NMSlibTransformer 1.077 sec (transform)

PyNNDescentTransformer 14.448 sec (fit)

PyNNDescentTransformer 7.103 sec (transform)

PyNNDescentTransformer 1.759 sec (transform)

注意到 PyNNDescentTransformer 第一次需要更多时间 fit 和第一 transform 由于numba及时编译器的负担。但在第一次调用之后,已编译的Python代码由numba保存在缓存中,并且后续调用不会受到初始负载的影响。两 KNeighborsTransformer 和 NMSlibTransformer 在这里只运行一次,因为它们会显示出更稳定 fit 和 transform 次(它们没有PyNNDescentTransformer的冷启动问题)。

import matplotlib.pyplot as plt

from matplotlib.ticker import NullFormatter

transformers = [

("TSNE with internal NearestNeighbors", TSNE(metric=metric, **tsne_params)),

(

"TSNE with KNeighborsTransformer",

make_pipeline(

KNeighborsTransformer(

n_neighbors=n_neighbors, mode="distance", metric=metric

),

TSNE(metric="precomputed", **tsne_params),

),

),

(

"TSNE with NMSlibTransformer",

make_pipeline(

NMSlibTransformer(n_neighbors=n_neighbors, metric=metric),

TSNE(metric="precomputed", **tsne_params),

),

),

]

# init the plot

nrows = len(datasets)

ncols = np.sum([1 for name, model in transformers if "TSNE" in name])

fig, axes = plt.subplots(

nrows=nrows, ncols=ncols, squeeze=False, figsize=(5 * ncols, 4 * nrows)

)

axes = axes.ravel()

i_ax = 0

for dataset_name, (X, y) in datasets:

msg = f"Benchmarking on {dataset_name}:"

print(f"\n{msg}\n" + str("-" * len(msg)))

for transformer_name, transformer in transformers:

longest = np.max([len(name) for name, model in transformers])

start = time.time()

Xt = transformer.fit_transform(X)

transform_duration = time.time() - start

print(

f"{transformer_name:<{longest}} {transform_duration:.3f} sec"

" (fit_transform)"

)

# plot TSNE embedding which should be very similar across methods

axes[i_ax].set_title(transformer_name + "\non " + dataset_name)

axes[i_ax].scatter(

Xt[:, 0],

Xt[:, 1],

c=y.astype(np.int32),

alpha=0.2,

cmap=plt.cm.viridis,

)

axes[i_ax].xaxis.set_major_formatter(NullFormatter())

axes[i_ax].yaxis.set_major_formatter(NullFormatter())

axes[i_ax].axis("tight")

i_ax += 1

fig.tight_layout()

plt.show()

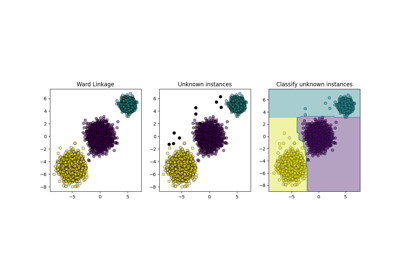

样本输出::

Benchmarking on MNIST_10000:

----------------------------

TSNE with internal NearestNeighbors 24.828 sec (fit_transform)

TSNE with KNeighborsTransformer 20.111 sec (fit_transform)

TSNE with NMSlibTransformer 21.757 sec (fit_transform)

Benchmarking on MNIST_20000:

----------------------------

TSNE with internal NearestNeighbors 51.955 sec (fit_transform)

TSNE with KNeighborsTransformer 50.994 sec (fit_transform)

TSNE with NMSlibTransformer 43.536 sec (fit_transform)

我们可以观察到, TSNE 估计器及其内部 NearestNeighbors 实现大致相当于管道 TSNE 和 KNeighborsTransformer 在性能方面。这是意料之中的,因为两条管道内部依赖相同的 NearestNeighbors 执行精确邻居搜索的实现。近似 NMSlibTransformer 已经比在最小数据集上的精确搜索稍快,但这种速度差异预计将在样本数量较多的数据集上变得更加显着。

然而请注意,并非所有近似搜索方法都能保证提高默认精确搜索方法的速度:事实上,自scikit-learn 1.1以来,精确搜索实现显着改进。此外,暴力精确搜索方法不需要在 fit 时间因此,为了在环境中获得整体性能改进 TSNE 管道中,大约搜索的收益 transform 需要比在以下位置建立大致搜索索引所花费的额外时间长 fit 时间

最后,TSNE算法本身也是计算密集型的,无论最近邻搜索如何。因此,将最近邻居搜索步骤加快5倍不会导致整个管道的速度加快5倍。

相关实例

Gallery generated by Sphinx-Gallery <https://sphinx-gallery.github.io> _