
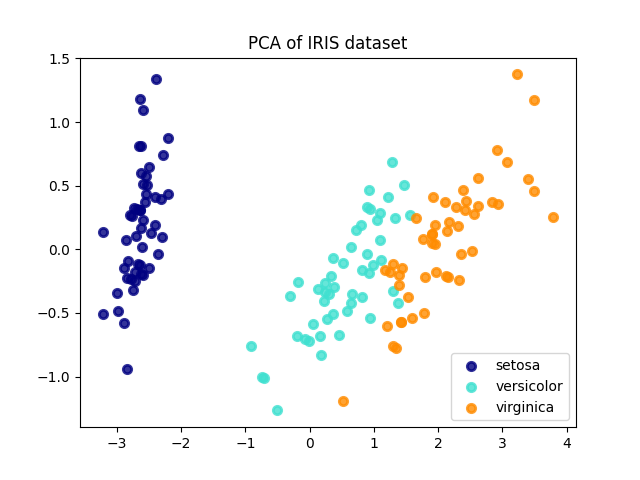

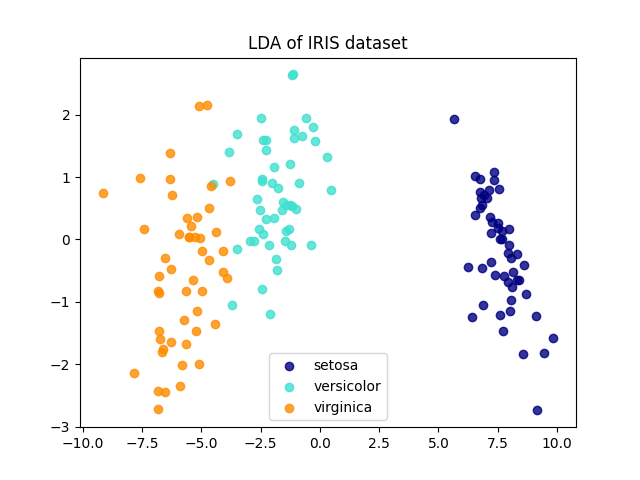

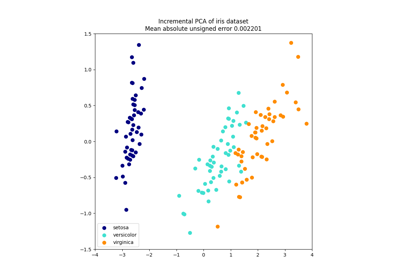

Comparison of LDA and PCA 2D projection of Iris dataset

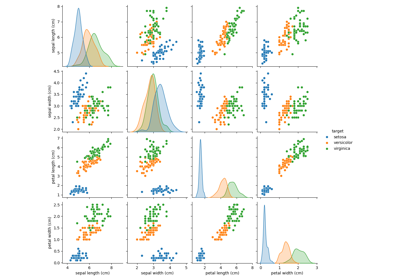

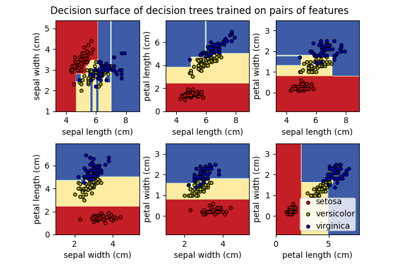

The Iris dataset represents 3 kinds of Iris flowers (Setosa, Versicolour and Virginica) with 4 attributes: sepal length, sepal width, petal length and petal width.
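A quick way to confirm this structure is to load the data and inspect its shape and labels. This is a small illustrative sketch, not part of the original example; note that scikit-learn spells the second species "versicolor".

```python
from collections import Counter

from sklearn.datasets import load_iris

iris = load_iris()
X, y = iris.data, iris.target

print(X.shape)                      # (n_samples, n_features)
print(iris.feature_names)           # the 4 measured attributes, in cm
print(iris.target_names.tolist())   # the 3 species names
class_counts = Counter(y.tolist())  # number of samples per integer class label
print(class_counts)
```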

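Principal Component Analysis (PCA) applied to this data identifies the combination of attributes (principal components, or directions in the feature space) that account for the most variance in the data. Here we plot the different samples on the first two principal components.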
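To make "directions of largest variance" concrete, the sketch below computes the principal components by hand from the eigendecomposition of the sample covariance matrix and checks them against scikit-learn's `PCA`. It is an illustration under the assumption that only the first two components are kept; `PCA` itself may use an SVD-based solver internally, so the projected coordinates agree only up to the sign of each axis.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

X = load_iris().data

# Center each feature, then form the 4x4 sample covariance matrix
Xc = X - X.mean(axis=0)
cov = np.cov(Xc, rowvar=False)

# Eigenvectors of the covariance are the principal directions;
# eigenvalues are the variances along them (sorted here in decreasing order)
eigvals, eigvecs = np.linalg.eigh(cov)
order = np.argsort(eigvals)[::-1]
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

manual_ratio = eigvals[:2] / eigvals.sum()  # share of total variance per component
X_manual = Xc @ eigvecs[:, :2]              # project onto the first two directions

pca = PCA(n_components=2).fit(X)
print(manual_ratio, pca.explained_variance_ratio_)
```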

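Linear Discriminant Analysis (LDA) tries to identify the attributes that account for the most variance between classes, relative to the variance within each class. In particular, LDA, in contrast to PCA, is a supervised method that uses the known class labels.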
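One classical way to express this (Fisher's formulation) is to build a within-class scatter matrix S_W and a between-class scatter matrix S_B and solve the generalized eigenproblem S_B w = λ S_W w. The sketch below does this by hand; it assumes that the relative sizes of the eigenvalues match the `explained_variance_ratio_` reported by scikit-learn's `LinearDiscriminantAnalysis`, which uses a different (SVD-based) solver by default. With 3 classes, at most 2 eigenvalues are non-zero, which is why LDA can produce at most 2 axes here.

```python
import numpy as np
from scipy.linalg import eigh
from sklearn.datasets import load_iris
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

X, y = load_iris(return_X_y=True)
overall_mean = X.mean(axis=0)
n_features = X.shape[1]
classes = np.unique(y)
n_classes = len(classes)

S_W = np.zeros((n_features, n_features))  # within-class scatter
S_B = np.zeros((n_features, n_features))  # between-class scatter
for k in classes:
    X_k = X[y == k]
    mean_k = X_k.mean(axis=0)
    centered = X_k - mean_k
    S_W += centered.T @ centered
    diff = (mean_k - overall_mean).reshape(-1, 1)
    S_B += len(X_k) * (diff @ diff.T)

# Generalized symmetric eigenproblem S_B w = lambda S_W w;
# scipy returns eigenvalues in ascending order, so reverse them
eigvals = eigh(S_B, S_W, eigvals_only=True)[::-1]

top = eigvals[: n_classes - 1]  # only n_classes - 1 eigenvalues are non-zero
manual_ratio = top / top.sum()

lda = LinearDiscriminantAnalysis(n_components=2).fit(X, y)
print(manual_ratio, lda.explained_variance_ratio_)
```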

explained variance ratio (first two components): [0.92461872 0.05306648]
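The printed values are each component's share of the total variance. Writing λ_k for the variance along the k-th principal component (the k-th largest eigenvalue of the sample covariance of the 4 features), the ratio and the share retained by the 2D projection are:

$$
r_k = \frac{\lambda_k}{\sum_{j=1}^{4} \lambda_j}, \qquad
r_1 + r_2 = 0.92461872 + 0.05306648 = 0.97768520
$$

So the first two principal components keep about 97.8% of the total variance, and roughly 2.2% is discarded by the projection.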

# Authors: The scikit-learn developers
# SPDX-License-Identifier: BSD-3-Clause

import matplotlib.pyplot as plt

from sklearn import datasets
from sklearn.decomposition import PCA
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

# Load the 150 x 4 feature matrix and the integer class labels (0, 1, 2)
iris = datasets.load_iris()
X = iris.data
y = iris.target
target_names = iris.target_names

# Unsupervised: PCA ignores y and keeps the 2 directions of largest variance
pca = PCA(n_components=2)
X_r = pca.fit(X).transform(X)

# Supervised: LDA uses y; with 3 classes it yields at most 2 discriminant axes
lda = LinearDiscriminantAnalysis(n_components=2)
X_r2 = lda.fit(X, y).transform(X)

# Percentage of variance explained for each component
print(
    "explained variance ratio (first two components): %s"
    % str(pca.explained_variance_ratio_)
)

plt.figure()
colors = ["navy", "turquoise", "darkorange"]
lw = 2

# Scatter each class on the first two principal components
for color, i, target_name in zip(colors, [0, 1, 2], target_names):
    plt.scatter(
        X_r[y == i, 0], X_r[y == i, 1], color=color, alpha=0.8, lw=lw, label=target_name
    )
plt.legend(loc="best", shadow=False, scatterpoints=1)
plt.title("PCA of IRIS dataset")

plt.figure()
# Same plot on the two LDA discriminant axes
for color, i, target_name in zip(colors, [0, 1, 2], target_names):
    plt.scatter(
        X_r2[y == i, 0], X_r2[y == i, 1], alpha=0.8, color=color, label=target_name
    )
plt.legend(loc="best", shadow=False, scatterpoints=1)
plt.title("LDA of IRIS dataset")

plt.show()
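The two plots can also be compared numerically. The sketch below uses a hypothetical helper, `fisher_ratio`, which is not part of scikit-learn: it measures how well a 1D projection separates the classes as between-class sum of squares divided by within-class sum of squares. Because LDA's first axis is chosen to maximize exactly this ratio over all linear projections, it should score at least as high as PCA's first axis, which only maximizes total variance.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis


def fisher_ratio(z, labels):
    """Hypothetical helper: between-class / within-class sum of squares of a 1D projection."""
    overall = z.mean()
    between = 0.0
    within = 0.0
    for k in np.unique(labels):
        z_k = z[labels == k]
        between += len(z_k) * (z_k.mean() - overall) ** 2
        within += ((z_k - z_k.mean()) ** 2).sum()
    return between / within


X, y = load_iris(return_X_y=True)
z_pca = PCA(n_components=2).fit_transform(X)[:, 0]                       # first PCA axis
z_lda = LinearDiscriminantAnalysis(n_components=2).fit_transform(X, y)[:, 0]  # first LDA axis

ratio_pca = fisher_ratio(z_pca, y)
ratio_lda = fisher_ratio(z_lda, y)
print(f"PCA axis 1: {ratio_pca:.2f}  LDA axis 1: {ratio_lda:.2f}")
```

This quantifies what the figures show visually: the classes overlap less along the first LDA axis than along the first principal component.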

Total running time of the script: (0 minutes 0.143 seconds)


Gallery generated by Sphinx-Gallery <https://sphinx-gallery.github.io>