
Monotonic Constraints

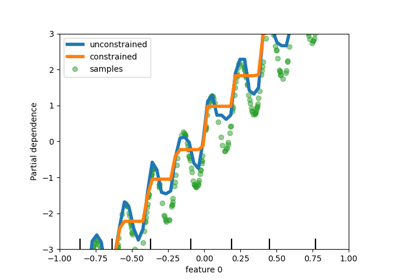

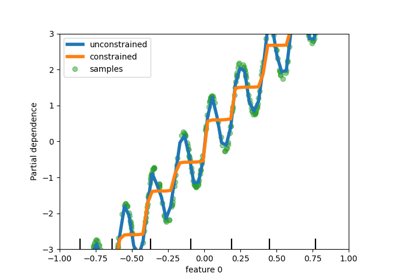

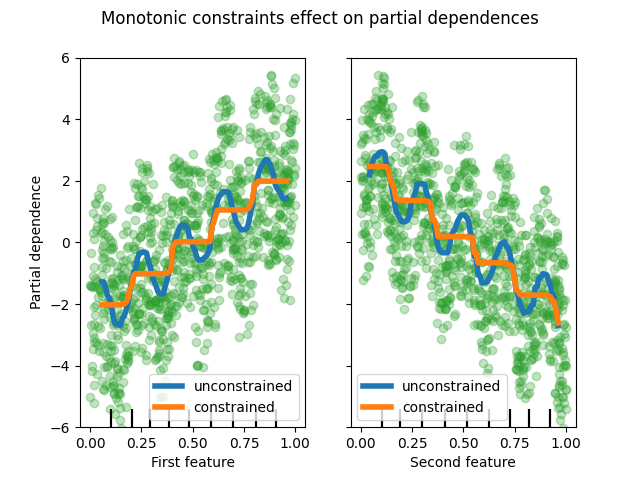

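This example illustrates the effect of monotonic constraints on a gradient boosting estimator.

We build an artificial dataset where the target value is in general positively correlated with the first feature (with some random and non-random variations), and in general negatively correlated with the second feature.

By imposing a monotonic increase constraint on the first feature and a monotonic decrease constraint on the second during learning, the estimator follows the general trend instead of being driven by the variations.

This example was inspired by the XGBoost documentation.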

# Authors: The scikit-learn developers
# SPDX-License-Identifier: BSD-3-Clause

import matplotlib.pyplot as plt
import numpy as np

from sklearn.ensemble import HistGradientBoostingRegressor
from sklearn.inspection import PartialDependenceDisplay

rng = np.random.RandomState(0)

n_samples = 1000
f_0 = rng.rand(n_samples)
f_1 = rng.rand(n_samples)
X = np.c_[f_0, f_1]
noise = rng.normal(loc=0.0, scale=0.01, size=n_samples)

# y is positively correlated with f_0, and negatively correlated with f_1
y = 5 * f_0 + np.sin(10 * np.pi * f_0) - 5 * f_1 - np.cos(10 * np.pi * f_1) + noise
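To see why the target is only monotonic "in general", it can help to look at the first-feature term of `y` in isolation. The sketch below is not part of the original example: it uses only the standard library, and the helper name `f0_term` is hypothetical. It evaluates `5 * f_0 + sin(10 * pi * f_0)` on an evenly spaced grid and counts how many grid steps go down even though the overall trend goes up.

```python
import math


def f0_term(f_0):
    """Noise-free contribution of the first feature to y (hypothetical helper)."""
    return 5 * f_0 + math.sin(10 * math.pi * f_0)


# Evaluate the term on an evenly spaced grid over [0, 1].
grid = [i / 200 for i in range(201)]
values = [f0_term(v) for v in grid]

# Overall trend: difference between the right and left ends of the interval.
overall_increase = values[-1] - values[0]

# Local behavior: number of grid steps on which the term decreases.
n_decreasing_steps = sum(b < a for a, b in zip(values, values[1:]))

print(f"overall increase: {overall_increase:.2f}")
print(f"decreasing steps: {n_decreasing_steps} of {len(values) - 1}")
```

The decreasing steps come from the sine term: the derivative `5 + 10 * pi * cos(10 * pi * f_0)` is negative wherever `cos(10 * pi * f_0)` falls below `-1 / (2 * pi)`. This is the non-random variation that a monotonic constraint is meant to smooth over.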

Fit a first model on this dataset without any constraints.

gbdt_no_cst = HistGradientBoostingRegressor()
gbdt_no_cst.fit(X, y)

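Fit a second model on this dataset with a monotonic increase (1) constraint on the first feature and a monotonic decrease (-1) constraint on the second.

gbdt_with_monotonic_cst = HistGradientBoostingRegressor(monotonic_cst=[1, -1])
gbdt_with_monotonic_cst.fit(X, y)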

Let us now display the partial dependence of the predictions on the two features.

fig, ax = plt.subplots()
disp = PartialDependenceDisplay.from_estimator(
    gbdt_no_cst,
    X,
    features=[0, 1],
    feature_names=(
        "First feature",
        "Second feature",
    ),
    line_kw={"linewidth": 4, "label": "unconstrained", "color": "tab:blue"},
    ax=ax,
)
PartialDependenceDisplay.from_estimator(
    gbdt_with_monotonic_cst,
    X,
    features=[0, 1],
    line_kw={"linewidth": 4, "label": "constrained", "color": "tab:orange"},
    ax=disp.axes_,
)

for f_idx in (0, 1):
    disp.axes_[0, f_idx].plot(
        X[:, f_idx], y, "o", alpha=0.3, zorder=-1, color="tab:green"
    )
    disp.axes_[0, f_idx].set_ylim(-6, 6)

plt.legend()
fig.suptitle("Monotonic constraints effect on partial dependences")
plt.show()

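We can see that the predictions of the unconstrained model capture the oscillations of the data, while the constrained model follows the general trend and ignores the local variations.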

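Using feature names to specify monotonic constraints

Note that if the training data has feature names, the monotonic constraints can be specified by passing a dictionary that maps feature names to constraints: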

import pandas as pd

X_df = pd.DataFrame(X, columns=["f_0", "f_1"])

gbdt_with_monotonic_cst_df = HistGradientBoostingRegressor(
    monotonic_cst={"f_0": 1, "f_1": -1}
).fit(X_df, y)

np.allclose(
    gbdt_with_monotonic_cst_df.predict(X_df), gbdt_with_monotonic_cst.predict(X)
)

True

Total running time of the script: (0 minutes 0.406 seconds)
