
Sample pipeline for text feature extraction and evaluation#

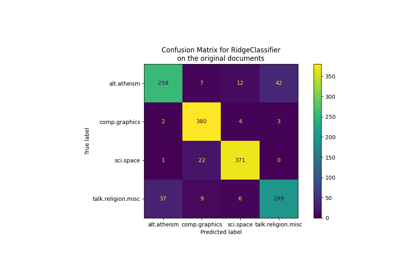

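The dataset used in this example is the 20 newsgroups text dataset, which is automatically downloaded, cached and reused for the document classification example.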

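In this example, we tune hyperparameters with a RandomizedSearchCV. For a demo of the performance of some other classifiers, see the notebook "Classification of text documents using sparse features".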

# Authors: The scikit-learn developers

# SPDX-License-Identifier: BSD-3-Clause

Data loading#

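We load two categories from the training set. You can adjust the number of categories by adding their names to the list, or by setting categories=None when calling the dataset loader fetch_20newsgroups to get all 20 of them.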

from sklearn.datasets import fetch_20newsgroups

categories = [
    "alt.atheism",
    "talk.religion.misc",
]

data_train = fetch_20newsgroups(
    subset="train",
    categories=categories,
    shuffle=True,
    random_state=42,
    remove=("headers", "footers", "quotes"),
)

data_test = fetch_20newsgroups(
    subset="test",
    categories=categories,
    shuffle=True,
    random_state=42,
    remove=("headers", "footers", "quotes"),
)

print(f"Loading 20 newsgroups dataset for {len(data_train.target_names)} categories:")
print(data_train.target_names)
print(f"{len(data_train.data)} documents")

Loading 20 newsgroups dataset for 2 categories:

['alt.atheism', 'talk.religion.misc']

857 documents

Pipeline with hyperparameter tuning#

We define a pipeline that combines a text feature vectorizer with a classifier that is simple yet effective for text classification.

from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.naive_bayes import ComplementNB
from sklearn.pipeline import Pipeline

pipeline = Pipeline(
    [
        ("vect", TfidfVectorizer()),
        ("clf", ComplementNB()),
    ]
)
pipeline

We define a grid of hyperparameters to be explored by the RandomizedSearchCV. Using a GridSearchCV instead would explore all the possible combinations on the grid, which can be costly to compute, whereas the parameter n_iter of the RandomizedSearchCV controls the number of different random combinations that are evaluated. Notice that setting n_iter larger than the number of possible combinations in a grid would lead to repeating already-explored combinations. We search for the best parameter combination for both the feature extraction (vect__) and the classifier (clf__).

import numpy as np

parameter_grid = {
    "vect__max_df": (0.2, 0.4, 0.6, 0.8, 1.0),
    "vect__min_df": (1, 3, 5, 10),
    "vect__ngram_range": ((1, 1), (1, 2)),  # unigrams or bigrams
    "vect__norm": ("l1", "l2"),
    "clf__alpha": np.logspace(-6, 6, 13),
}

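In this case n_iter=40 is not an exhaustive search of the hyperparameter grid. In practice it would be interesting to increase the parameter n_iter to get a more informative analysis. As a consequence, the computation time increases. We can reduce it by evaluating parameter combinations in parallel, increasing the number of CPUs used via the parameter n_jobs.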
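To put n_iter=40 in perspective, the following minimal sketch counts the combinations in the full grid using only the standard library. The dictionary mirrors parameter_grid, with the alpha values written out as plain floats instead of np.logspace; the names grid, n_combinations and coverage are illustrative.

```python
import math

# Candidate values per hyperparameter, mirroring parameter_grid
# (alpha is spelled out with plain floats instead of np.logspace).
grid = {
    "vect__max_df": (0.2, 0.4, 0.6, 0.8, 1.0),
    "vect__min_df": (1, 3, 5, 10),
    "vect__ngram_range": ((1, 1), (1, 2)),
    "vect__norm": ("l1", "l2"),
    "clf__alpha": tuple(10.0**k for k in range(-6, 7)),
}

# The full grid is the Cartesian product of all candidate lists.
n_combinations = math.prod(len(values) for values in grid.values())
n_iter = 40
coverage = n_iter / n_combinations

print(f"{n_combinations} combinations in the full grid")
print(f"n_iter={n_iter} samples {coverage:.1%} of them")
```

The grid holds 5 × 4 × 2 × 2 × 13 = 1040 combinations, so the random search evaluates under 4% of them. With 5-fold cross-validation, an exhaustive search would need 5200 fits.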

from pprint import pprint

from sklearn.model_selection import RandomizedSearchCV

random_search = RandomizedSearchCV(
    estimator=pipeline,
    param_distributions=parameter_grid,
    n_iter=40,
    random_state=0,
    n_jobs=2,
    verbose=1,
)

print("Performing grid search...")
print("Hyperparameters to be evaluated:")
pprint(parameter_grid)

Performing grid search...

Hyperparameters to be evaluated:

{'clf__alpha': array([1.e-06, 1.e-05, 1.e-04, 1.e-03, 1.e-02, 1.e-01, 1.e+00, 1.e+01,

1.e+02, 1.e+03, 1.e+04, 1.e+05, 1.e+06]),

'vect__max_df': (0.2, 0.4, 0.6, 0.8, 1.0),

'vect__min_df': (1, 3, 5, 10),

'vect__ngram_range': ((1, 1), (1, 2)),

'vect__norm': ('l1', 'l2')}

from time import time

t0 = time()

random_search.fit(data_train.data, data_train.target)

print(f"Done in {time() - t0:.3f}s")

Fitting 5 folds for each of 40 candidates, totalling 200 fits

Done in 33.566s

print("Best parameters combination found:")
best_parameters = random_search.best_estimator_.get_params()
for param_name in sorted(parameter_grid.keys()):
    print(f"{param_name}: {best_parameters[param_name]}")

Best parameters combination found:

clf__alpha: 0.01

vect__max_df: 0.2

vect__min_df: 1

vect__ngram_range: (1, 1)

vect__norm: l1

test_accuracy = random_search.score(data_test.data, data_test.target)
print(
    "Accuracy of the best parameters using the inner CV of "
    f"the random search: {random_search.best_score_:.3f}"
)
print(f"Accuracy on test set: {test_accuracy:.3f}")

Accuracy of the best parameters using the inner CV of the random search: 0.816

Accuracy on test set: 0.709

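The prefixes vect and clf are required to avoid possible ambiguities in the pipeline, but they are not necessary for visualizing the results. Because of this, we define a function that renames the tuned hyperparameters to improve readability.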

import pandas as pd


def shorten_param(param_name):
    """Remove components' prefixes in param_name."""
    if "__" in param_name:
        return param_name.rsplit("__", 1)[1]
    return param_name


cv_results = pd.DataFrame(random_search.cv_results_)
cv_results = cv_results.rename(shorten_param, axis=1)
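As a quick illustration of what the renaming does, the sketch below applies the same function to a few column names of the kind found in cv_results_, where tuned parameters appear with a param_ prefix. The list of example names is chosen for illustration only.

```python
def shorten_param(param_name):
    """Remove components' prefixes in param_name."""
    if "__" in param_name:
        # Keep only the part after the last double underscore.
        return param_name.rsplit("__", 1)[1]
    return param_name


# Illustrative column names: two tuned parameters and one score column.
examples = ["param_vect__max_df", "param_clf__alpha", "mean_test_score"]
shortened = {name: shorten_param(name) for name in examples}

for old, new in shortened.items():
    print(f"{old} -> {new}")
```

Columns without a double underscore, such as mean_test_score, are left untouched, which is why the later plotting code can refer to both alpha and mean_test_score.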

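We can use a plotly.express.scatter to visualize the trade-off between scoring time and mean test score (i.e. "CV score"). Hovering the cursor over a given point displays the corresponding parameters. Error bars correspond to one standard deviation as computed across the different folds of the cross-validation.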
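To make the meaning of the error bars concrete, here is a small sketch that summarizes per-fold scores for a single candidate. The five fold scores are made-up toy values, not results from this search, and the sketch assumes the spread is reported as a population standard deviation (NumPy's default, ddof=0).

```python
import statistics

# Hypothetical accuracies of one candidate on 5 cross-validation folds
# (toy numbers for illustration, not taken from the search above).
fold_scores = [0.80, 0.82, 0.78, 0.84, 0.81]

# The point plotted for the candidate is the mean over folds ...
mean_score = statistics.fmean(fold_scores)
# ... and the error bar is one standard deviation over the same folds.
# pstdev is the population standard deviation (divides by n, not n - 1).
std_score = statistics.pstdev(fold_scores)

print(f"mean_test_score = {mean_score:.3f}")
print(f"std_test_score  = {std_score:.3f}")
```

A candidate with a high mean but a wide error bar is less reliably better than one with a slightly lower mean and a tight spread.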

import plotly.express as px

param_names = [shorten_param(name) for name in parameter_grid.keys()]
labels = {
    "mean_score_time": "CV Score time (s)",
    "mean_test_score": "CV score (accuracy)",
}
fig = px.scatter(
    cv_results,
    x="mean_score_time",
    y="mean_test_score",
    error_x="std_score_time",
    error_y="std_test_score",
    hover_data=param_names,
    labels=labels,
)
fig.update_layout(
    title={
        "text": "trade-off between scoring time and mean test score",
        "y": 0.95,
        "x": 0.5,
        "xanchor": "center",
        "yanchor": "top",
    }
)
fig

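Notice that the cluster of models in the upper-left corner of the plot has the best trade-off between accuracy and scoring time. In this case, using bigrams increases the required scoring time without considerably improving the accuracy of the pipeline.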
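One way to see why bigrams cost more time is that they enlarge the feature set. The sketch below is a simplified n-gram extractor, not scikit-learn's tokenizer: it assumes lowercasing and a plain whitespace split, and the helper name word_ngrams and the sample sentence are illustrative.

```python
def word_ngrams(text, ngram_range):
    """Return word n-grams for every n in [min_n, max_n].

    Simplification: tokens come from a lowercase whitespace split.
    """
    min_n, max_n = ngram_range
    tokens = text.lower().split()
    return [
        " ".join(tokens[i : i + n])
        for n in range(min_n, max_n + 1)
        for i in range(len(tokens) - n + 1)
    ]


sentence = "the quick brown fox"
unigrams = word_ngrams(sentence, (1, 1))
uni_and_bigrams = word_ngrams(sentence, (1, 2))

print(len(unigrams), unigrams)
print(len(uni_and_bigrams), uni_and_bigrams)
```

A document with n tokens yields n unigrams plus n - 1 bigrams, so ngram_range=(1, 2) roughly doubles the number of extracted terms per document, and the vocabulary tends to grow faster still because most bigrams are rare.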

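Note

For more information on how to customize an automated tuning to maximize score and minimize scoring time, see the example notebook "Custom refit strategy of a grid search with cross-validation".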

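We can also use a plotly.express.parallel_coordinates to further visualize the mean test score as a function of the tuned hyperparameters. This helps to find interactions between more than two hyperparameters and provides intuition on their relevance for improving the performance of a pipeline.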

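We apply a math.log10 transformation on the alpha axis to spread the active range and improve the readability of the plot. A value \(x\) on said axis is to be understood as \(10^x\).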
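As a small check of how to read the transformed axis, this sketch maps the 13 alpha candidates to their base-10 exponents. The alpha values are written as plain floats rather than via np.logspace, and rounding is used only to hide floating-point noise.

```python
import math

# The alpha candidates span 13 orders of magnitude.
alphas = [10.0**k for k in range(-6, 7)]

# On the transformed axis each alpha is shown as its base-10 exponent.
exponents = [round(math.log10(a)) for a in alphas]

for a, x in zip(alphas, exponents):
    print(f"alpha = {a:g}  ->  axis value {x}")
```

On a linear axis almost all of these values would be squeezed next to zero. After the transformation they are evenly spaced from -6 to 6.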

import math

column_results = param_names + ["mean_test_score", "mean_score_time"]

transform_funcs = dict.fromkeys(column_results, lambda x: x)
# Using a logarithmic scale for alpha
transform_funcs["alpha"] = math.log10
# L1 norms are mapped to index 1, and L2 norms to index 2
transform_funcs["norm"] = lambda x: 2 if x == "l2" else 1
# Unigrams are mapped to index 1 and bigrams to index 2
transform_funcs["ngram_range"] = lambda x: x[1]

fig = px.parallel_coordinates(
    cv_results[column_results].apply(transform_funcs),
    color="mean_test_score",
    color_continuous_scale=px.colors.sequential.Viridis_r,
    labels=labels,
)
fig.update_layout(
    title={
        "text": "Parallel coordinates plot of text classifier pipeline",
        "y": 0.99,
        "x": 0.5,
        "xanchor": "center",
        "yanchor": "top",
    }
)
fig

The parallel coordinates plot displays the values of the hyperparameters on different columns while the performance metric is color coded. It is possible to select a range of results by clicking and holding on any axis of the parallel coordinate plot. You can then slide (move) the range selection and cross two selections to see the intersections. You can undo a selection by clicking once again on the same axis.

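For this hyperparameter search in particular, it is interesting to notice that the top performing models do not seem to depend on the regularization norm, but they do depend on max_df, min_df and the regularization strength alpha. The reason is that including noisy features (i.e. max_df close to \(1.0\) or min_df close to \(0\)) tends to cause overfitting and therefore requires a stronger regularization to compensate. Having fewer features requires less regularization and less scoring time.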

The best accuracy scores are obtained when alpha is between \(10^{-6}\) and \(10^0\), regardless of the hyperparameter norm.
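To illustrate how max_df and min_df prune features, here is a pure-Python sketch of document-frequency filtering. It is not scikit-learn's implementation: it assumes a lowercase whitespace tokenizer, treats integer thresholds as document counts and float thresholds as proportions of the corpus, and uses a made-up four-document corpus. The helper name filter_by_document_frequency is hypothetical.

```python
from collections import Counter


def filter_by_document_frequency(docs, min_df=1, max_df=1.0):
    """Keep terms whose document frequency lies within [min_df, max_df].

    Integers are absolute document counts, floats are proportions.
    """
    n_docs = len(docs)
    doc_freq = Counter()
    for doc in docs:
        # Count each term at most once per document.
        doc_freq.update(set(doc.lower().split()))
    min_count = min_df if isinstance(min_df, int) else min_df * n_docs
    max_count = max_df if isinstance(max_df, int) else max_df * n_docs
    return sorted(t for t, c in doc_freq.items() if min_count <= c <= max_count)


# Toy corpus for illustration only.
toy_docs = [
    "god exists",
    "god does not exist",
    "the church and god",
    "atheism and the church",
]

full_vocab = filter_by_document_frequency(toy_docs)
pruned_vocab = filter_by_document_frequency(toy_docs, min_df=2, max_df=0.5)

print(len(full_vocab), full_vocab)
print(len(pruned_vocab), pruned_vocab)
```

With the default thresholds all 9 terms are kept. Requiring a term to appear in at least 2 documents and in at most half of them leaves 3, dropping both the one-off terms and the most widespread one. A smaller vocabulary of this kind gives the classifier fewer noisy features to overfit.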

Total running time of the script: (0 minutes 35.067 seconds)
