
Comparing randomized search and grid search for hyperparameter estimation

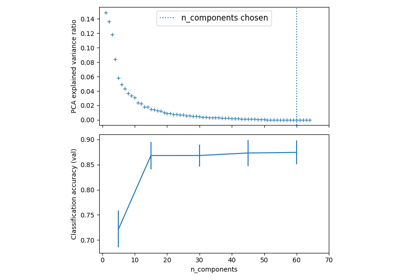

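Compare randomized search and grid search for optimizing the hyperparameters of a linear SVM trained with SGD (stochastic gradient descent). All parameters that influence the learning are searched simultaneously (except for the number of estimators, which poses a time/quality trade-off).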
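The two searches differ mainly in how many candidates they evaluate, and this can be counted without fitting anything. The following is a minimal sketch. It assumes the same search space as the script below and uses scikit-learn's `ParameterGrid` and `ParameterSampler` helpers, which enumerate candidates for grid and randomized search respectively. The `random_state=0` seed is an arbitrary addition for reproducibility.

```python
import numpy as np
import scipy.stats as stats
from sklearn.model_selection import ParameterGrid, ParameterSampler

# Discrete grid, as in the example: 2 * 10 * 3 combinations.
param_grid = {
    "average": [True, False],
    "l1_ratio": np.linspace(0, 1, num=10),
    "alpha": np.power(10, np.arange(-2, 1, dtype=float)),
}
# Distributions over the same ranges, as in the example.
param_dist = {
    "average": [True, False],
    "l1_ratio": stats.uniform(0, 1),
    "alpha": stats.loguniform(1e-2, 1e0),
}

# Grid search evaluates every combination in the grid.
n_grid = len(ParameterGrid(param_grid))

# Randomized search evaluates a fixed budget of n_iter sampled candidates.
samples = list(ParameterSampler(param_dist, n_iter=15, random_state=0))
n_random = len(samples)

print(n_grid, n_random)  # 60 15
```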

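The randomized search and the grid search explore the same ranges of parameters. The grid covers them with a fixed set of values, while the randomized search draws candidates from continuous distributions over those ranges. The best validation scores the two searches reach are quite similar, while the run time of the randomized search is drastically lower (0.48 s for 15 candidates versus 2.89 s for 60 candidates in the run shown here).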
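A quick check shows that the sampled candidates stay inside the ranges spanned by the grid, and explains the run-time gap. This is a sketch under two assumptions: the same distributions as the script, and scikit-learn's default 5-fold cross-validation (`cv=None`). Because every candidate is fitted once per fold, the cost scales with candidates times folds.

```python
import numpy as np
import scipy.stats as stats
from sklearn.model_selection import ParameterSampler

param_dist = {
    "average": [True, False],
    "l1_ratio": stats.uniform(0, 1),
    "alpha": stats.loguniform(1e-2, 1e0),
}
samples = list(ParameterSampler(param_dist, n_iter=15, random_state=0))

alphas = np.array([s["alpha"] for s in samples])
l1_ratios = np.array([s["l1_ratio"] for s in samples])

# The grid spans alpha in [0.01, 1.0] and l1_ratio in [0, 1];
# the sampled values fall inside the same ranges.
print(alphas.min() >= 1e-2, alphas.max() <= 1.0)
print(l1_ratios.min() >= 0.0, l1_ratios.max() <= 1.0)

# Fits performed = candidates x CV folds (5 folds assumed, the default).
n_folds = 5
n_random_fits = 15 * n_folds
n_grid_fits = 60 * n_folds
print(n_random_fits, n_grid_fits)  # 75 300
```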

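The randomized search may perform slightly worse. This is likely a noise effect that would not carry over to a held-out test set. In the run shown here, the gap between the best mean scores (0.987 versus 0.994) is smaller than the standard deviation across cross-validation folds of the randomized search's best candidate (0.011).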
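One way to test the noise explanation is to tune both searches on a training split and compare them on data neither search has seen. The following is a minimal sketch. The 25% stratified hold-out split and the `random_state` seeds are assumptions added here; they are not part of the original example.

```python
import numpy as np
import scipy.stats as stats
from sklearn.datasets import load_digits
from sklearn.linear_model import SGDClassifier
from sklearn.model_selection import (
    GridSearchCV,
    RandomizedSearchCV,
    train_test_split,
)

X, y = load_digits(return_X_y=True, n_class=3)

# Hold out 25% of the samples; neither search sees them during tuning.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

# Seeds are added for reproducibility (not in the original script).
clf = SGDClassifier(loss="hinge", penalty="elasticnet", random_state=0)

param_dist = {
    "average": [True, False],
    "l1_ratio": stats.uniform(0, 1),
    "alpha": stats.loguniform(1e-2, 1e0),
}
param_grid = {
    "average": [True, False],
    "l1_ratio": np.linspace(0, 1, num=10),
    "alpha": np.power(10, np.arange(-2, 1, dtype=float)),
}

searches = {
    "randomized": RandomizedSearchCV(
        clf, param_distributions=param_dist, n_iter=15, random_state=0
    ),
    "grid": GridSearchCV(clf, param_grid=param_grid),
}

test_scores = {}
for name, search in searches.items():
    search.fit(X_train, y_train)
    # With refit=True (the default), score() uses the best candidate
    # retrained on all of X_train; for a classifier this is accuracy.
    test_scores[name] = search.score(X_test, y_test)
    print(f"{name}: CV {search.best_score_:.3f}, held-out {test_scores[name]:.3f}")
```

A single split is itself noisy. Close held-out accuracies are therefore consistent with the noise explanation, but they do not prove it.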

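Note that in practice, one would not search over this many different parameters simultaneously using grid search, but would pick only the ones deemed most important.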
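For illustration, suppose the regularization strength `alpha` were judged the most important parameter. This is an assumption made here, not a finding of the example. A grid could then vary only `alpha` and fix the rest on the estimator. The fixed values `average=False` and `l1_ratio=0.15` below are `SGDClassifier`'s defaults, and the 7-point grid is a hypothetical choice.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.linear_model import SGDClassifier
from sklearn.model_selection import GridSearchCV

X, y = load_digits(return_X_y=True, n_class=3)

# Parameters assumed to matter less are fixed directly on the estimator.
clf = SGDClassifier(
    loss="hinge",
    penalty="elasticnet",
    average=False,
    l1_ratio=0.15,
    random_state=0,
)

# Search only alpha, on a finer log-spaced grid over the same range.
param_grid = {"alpha": np.logspace(-2, 0, num=7)}
search = GridSearchCV(clf, param_grid=param_grid).fit(X, y)

print(len(search.cv_results_["params"]))  # 7
print(search.best_params_)
```

The budget can then go into a finer grid on one axis rather than a coarse grid over every axis.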

```
RandomizedSearchCV took 0.48 seconds for 15 candidates parameter settings.
Model with rank: 1
Mean validation score: 0.987 (std: 0.011)
Parameters: {'alpha': np.float64(0.01001911984591966), 'average': False, 'l1_ratio': np.float64(0.7665012035905148)}

Model with rank: 2
Mean validation score: 0.987 (std: 0.011)
Parameters: {'alpha': np.float64(0.40134964872774576), 'average': False, 'l1_ratio': np.float64(0.05033776045421079)}

Model with rank: 3
Mean validation score: 0.983 (std: 0.011)
Parameters: {'alpha': np.float64(0.1352374671440465), 'average': False, 'l1_ratio': np.float64(0.6719936995475292)}

GridSearchCV took 2.89 seconds for 60 candidate parameter settings.
Model with rank: 1
Mean validation score: 0.994 (std: 0.005)
Parameters: {'alpha': np.float64(0.1), 'average': False, 'l1_ratio': np.float64(0.1111111111111111)}

Model with rank: 2
Mean validation score: 0.991 (std: 0.008)
Parameters: {'alpha': np.float64(0.1), 'average': False, 'l1_ratio': np.float64(0.0)}

Model with rank: 3
Mean validation score: 0.989 (std: 0.018)
Parameters: {'alpha': np.float64(1.0), 'average': False, 'l1_ratio': np.float64(0.0)}
```

```python
# Authors: The scikit-learn developers
# SPDX-License-Identifier: BSD-3-Clause

from time import time

import numpy as np
import scipy.stats as stats

from sklearn.datasets import load_digits
from sklearn.linear_model import SGDClassifier
from sklearn.model_selection import GridSearchCV, RandomizedSearchCV

# get some data
X, y = load_digits(return_X_y=True, n_class=3)

# build a classifier
clf = SGDClassifier(loss="hinge", penalty="elasticnet", fit_intercept=True)


# Utility function to report best scores
def report(results, n_top=3):
    for i in range(1, n_top + 1):
        candidates = np.flatnonzero(results["rank_test_score"] == i)
        for candidate in candidates:
            print("Model with rank: {0}".format(i))
            print(
                "Mean validation score: {0:.3f} (std: {1:.3f})".format(
                    results["mean_test_score"][candidate],
                    results["std_test_score"][candidate],
                )
            )
            print("Parameters: {0}".format(results["params"][candidate]))
            print("")


# specify parameters and distributions to sample from
param_dist = {
    "average": [True, False],
    "l1_ratio": stats.uniform(0, 1),
    "alpha": stats.loguniform(1e-2, 1e0),
}

# run randomized search
n_iter_search = 15
random_search = RandomizedSearchCV(
    clf, param_distributions=param_dist, n_iter=n_iter_search
)

start = time()
random_search.fit(X, y)
print(
    "RandomizedSearchCV took %.2f seconds for %d candidates parameter settings."
    % ((time() - start), n_iter_search)
)
report(random_search.cv_results_)

# use a full grid over all parameters
param_grid = {
    "average": [True, False],
    "l1_ratio": np.linspace(0, 1, num=10),
    "alpha": np.power(10, np.arange(-2, 1, dtype=float)),
}

# run grid search
grid_search = GridSearchCV(clf, param_grid=param_grid)
start = time()
grid_search.fit(X, y)

print(
    "GridSearchCV took %.2f seconds for %d candidate parameter settings."
    % (time() - start, len(grid_search.cv_results_["params"]))
)
report(grid_search.cv_results_)
```

Total running time of the script: (0 minutes 3.380 seconds)
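The `report` helper selects candidates by their value in `rank_test_score`. When several candidates tie, all of them are printed under the shared rank, and the next rank number may then have no candidates at all. The sketch below replays the same selection logic on a hand-made dictionary. The values are hypothetical, and only the keys `report` reads are included. `report_lines` is a hypothetical variant that returns the lines instead of printing them, and the ranks assume ties share the lowest rank.

```python
import numpy as np


def report_lines(results, n_top=3):
    """Same selection logic as report(), but returns the lines instead of printing."""
    lines = []
    for i in range(1, n_top + 1):
        # Tied candidates share rank i, so several may match it.
        candidates = np.flatnonzero(results["rank_test_score"] == i)
        for candidate in candidates:
            lines.append(
                f"rank {i}: mean {results['mean_test_score'][candidate]:.3f} "
                f"(std: {results['std_test_score'][candidate]:.3f}) "
                f"params {results['params'][candidate]}"
            )
    return lines


# Hypothetical results shaped like cv_results_: two candidates tie for first,
# so no candidate has rank 2.
results = {
    "rank_test_score": np.array([3, 1, 1, 4]),
    "mean_test_score": np.array([0.95, 0.97, 0.97, 0.90]),
    "std_test_score": np.array([0.01, 0.02, 0.01, 0.03]),
    "params": [{"alpha": 0.01}, {"alpha": 0.1}, {"alpha": 1.0}, {"alpha": 10.0}],
}

lines = report_lines(results)
for line in lines:
    print(line)
```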
