备注

Go to the end 下载完整的示例代码。或者通过浏览器中的MysterLite或Binder运行此示例

排列重要性与随机森林特征重要性(Millennium)#

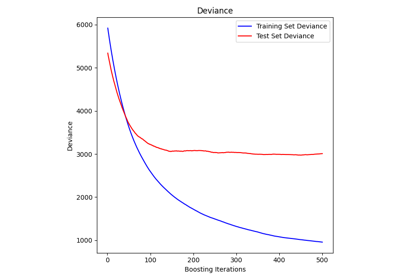

在本例中,我们将比较基于杂质的特征重要性 RandomForestClassifier 利用巨大数据集上的排列重要性 permutation_importance .我们将表明,基于杂质的特征重要性可以夸大数字特征的重要性。

此外,随机森林的基于杂质的特征重要性会受到基于训练数据集得出的统计数据的计算的影响:即使对于无法预测目标变量的特征,重要性也可能很高,只要模型有能力使用它们来进行过适应。

此示例展示了如何使用排列重要性作为可以减轻这些限制的替代方案。

引用

# Authors: The scikit-learn developers

# SPDX-License-Identifier: BSD-3-Clause

数据加载和特征工程#

让我们使用pandas加载泰坦尼克号数据集的副本。下面展示了如何对数字特征和分类特征应用单独的预处理。

我们进一步包括两个与目标变量没有任何相关的随机变量 (survived ):

random_num是一个高基数数字变量(唯一值与记录一样多)。random_cat是低基数分类变量(3个可能的值)。

import numpy as np

from sklearn.datasets import fetch_openml

from sklearn.model_selection import train_test_split

X, y = fetch_openml("titanic", version=1, as_frame=True, return_X_y=True)

rng = np.random.RandomState(seed=42)

X["random_cat"] = rng.randint(3, size=X.shape[0])

X["random_num"] = rng.randn(X.shape[0])

categorical_columns = ["pclass", "sex", "embarked", "random_cat"]

numerical_columns = ["age", "sibsp", "parch", "fare", "random_num"]

X = X[categorical_columns + numerical_columns]

X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y, random_state=42)

我们基于随机森林定义了一个预测模型。因此,我们将进行以下预处理步骤:

使用

OrdinalEncoder对类别特征进行编码;使用

SimpleImputer使用平均策略填充数字特征的缺失值。

from sklearn.compose import ColumnTransformer

from sklearn.ensemble import RandomForestClassifier

from sklearn.impute import SimpleImputer

from sklearn.pipeline import Pipeline

from sklearn.preprocessing import OrdinalEncoder

categorical_encoder = OrdinalEncoder(

handle_unknown="use_encoded_value", unknown_value=-1, encoded_missing_value=-1

)

numerical_pipe = SimpleImputer(strategy="mean")

preprocessing = ColumnTransformer(

[

("cat", categorical_encoder, categorical_columns),

("num", numerical_pipe, numerical_columns),

],

verbose_feature_names_out=False,

)

rf = Pipeline(

[

("preprocess", preprocessing),

("classifier", RandomForestClassifier(random_state=42)),

]

)

rf.fit(X_train, y_train)

模型精度#

在检查特征重要性之前,重要的是检查模型预测性能是否足够高。事实上,人们对检查非预测模型的重要特征几乎没有兴趣。

print(f"RF train accuracy: {rf.score(X_train, y_train):.3f}")

print(f"RF test accuracy: {rf.score(X_test, y_test):.3f}")

RF train accuracy: 1.000

RF test accuracy: 0.814

在这里,可以观察到训练准确性非常高(森林模型有足够的容量来完全记住训练集),但由于随机森林的内置装袋,它仍然可以足够好地推广到测试集。

通过限制树的容量(例如通过设置),可以用训练集的一定准确性换取测试集稍好的准确性 min_samples_leaf=5 或 min_samples_leaf=10 )以便限制过度配合,同时不会引入太多的不足配合。

然而,让我们暂时保留高容量随机森林模型,以便我们可以说明具有许多唯一值的变量的特征重要性的一些陷阱。

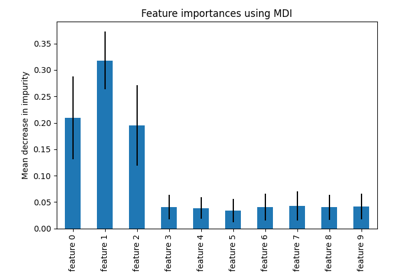

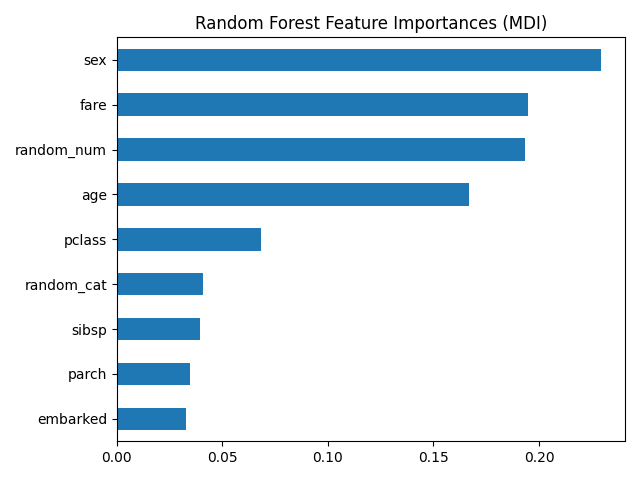

从平均杂质减少(MDI)看树木的特征重要性#

基于杂质的特征重要性将数字特征列为最重要的特征。因此,非预测性 random_num 变量被列为最重要的功能之一!

这个问题源于基于杂质的特征重要性的两个限制:

基于杂质的重要性偏向高基数特征;

基于杂质的重要性是根据训练集统计数据计算的,因此不反映特征有助于做出推广到测试集的预测的能力(当模型具有足够的容量时)。

The bias towards high cardinality features explains why the random_num has

a really large importance in comparison with random_cat while we would

expect that both random features have a null importance.

我们使用训练集统计数据这一事实解释了为什么 random_num 和 random_cat 特征的重要性非空。

import pandas as pd

feature_names = rf[:-1].get_feature_names_out()

mdi_importances = pd.Series(

rf[-1].feature_importances_, index=feature_names

).sort_values(ascending=True)

ax = mdi_importances.plot.barh()

ax.set_title("Random Forest Feature Importances (MDI)")

ax.figure.tight_layout()

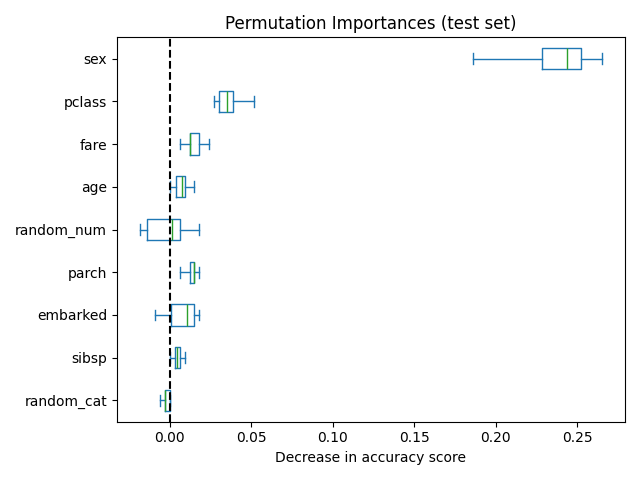

作为替代, rf 是在坚持的测试集上计算的。这表明低基数的范畴特征, sex 和 pclass 是最重要的特征。事实上,排列这些特征的值将导致测试集中模型的准确性评分下降最多。

另外,请注意,正如预期的那样,这两个随机特征的重要性都非常低(接近0)。

from sklearn.inspection import permutation_importance

result = permutation_importance(

rf, X_test, y_test, n_repeats=10, random_state=42, n_jobs=2

)

sorted_importances_idx = result.importances_mean.argsort()

importances = pd.DataFrame(

result.importances[sorted_importances_idx].T,

columns=X.columns[sorted_importances_idx],

)

ax = importances.plot.box(vert=False, whis=10)

ax.set_title("Permutation Importances (test set)")

ax.axvline(x=0, color="k", linestyle="--")

ax.set_xlabel("Decrease in accuracy score")

ax.figure.tight_layout()

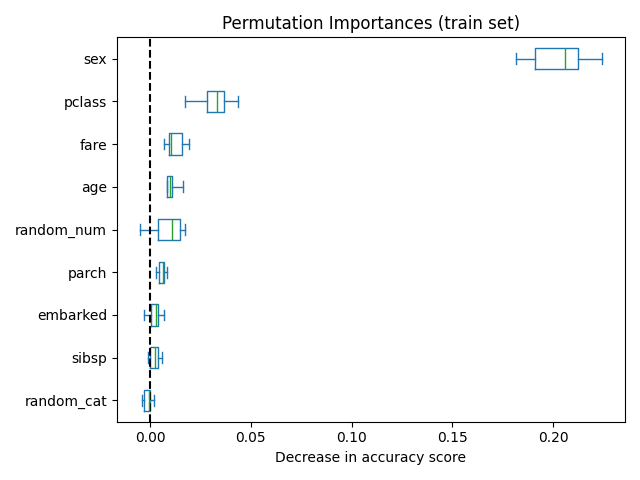

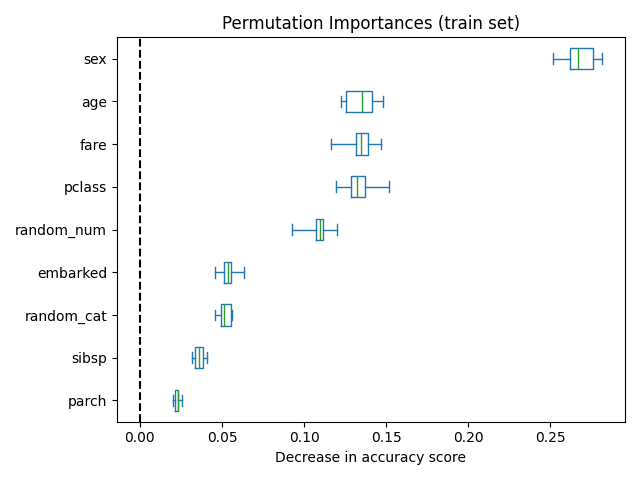

还可以计算训练集中的排列重要性。这表明 random_num 和 random_cat 获得比在测试集中计算时明显更高的重要性排名。这两个图之间的差异证实了RF模型有足够的能力使用随机数字和分类特征来过适应。

result = permutation_importance(

rf, X_train, y_train, n_repeats=10, random_state=42, n_jobs=2

)

sorted_importances_idx = result.importances_mean.argsort()

importances = pd.DataFrame(

result.importances[sorted_importances_idx].T,

columns=X.columns[sorted_importances_idx],

)

ax = importances.plot.box(vert=False, whis=10)

ax.set_title("Permutation Importances (train set)")

ax.axvline(x=0, color="k", linestyle="--")

ax.set_xlabel("Decrease in accuracy score")

ax.figure.tight_layout()

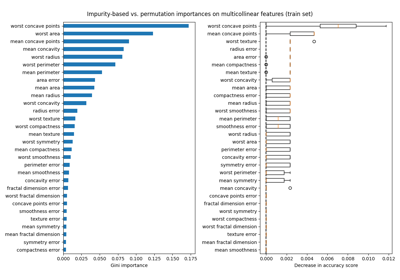

我们可以通过设置限制树木的过适应容量来进一步重新尝试实验 min_samples_leaf 20个数据点。

rf.set_params(classifier__min_samples_leaf=20).fit(X_train, y_train)

观察训练和测试集中的准确性分数,我们观察到这两个指标现在非常相似。因此,我们的模型不再过度适合。然后我们可以检查这个新模型的排列重要性。

print(f"RF train accuracy: {rf.score(X_train, y_train):.3f}")

print(f"RF test accuracy: {rf.score(X_test, y_test):.3f}")

RF train accuracy: 0.810

RF test accuracy: 0.832

train_result = permutation_importance(

rf, X_train, y_train, n_repeats=10, random_state=42, n_jobs=2

)

test_results = permutation_importance(

rf, X_test, y_test, n_repeats=10, random_state=42, n_jobs=2

)

sorted_importances_idx = train_result.importances_mean.argsort()

train_importances = pd.DataFrame(

train_result.importances[sorted_importances_idx].T,

columns=X.columns[sorted_importances_idx],

)

test_importances = pd.DataFrame(

test_results.importances[sorted_importances_idx].T,

columns=X.columns[sorted_importances_idx],

)

for name, importances in zip(["train", "test"], [train_importances, test_importances]):

ax = importances.plot.box(vert=False, whis=10)

ax.set_title(f"Permutation Importances ({name} set)")

ax.set_xlabel("Decrease in accuracy score")

ax.axvline(x=0, color="k", linestyle="--")

ax.figure.tight_layout()

现在,我们可以观察到,在这两个场景中, random_num 和 random_cat 与过拟随机森林相比,特征的重要性较低。然而,关于其他特征重要性的结论仍然有效。

Total running time of the script: (0分5.110秒)

相关实例

Gallery generated by Sphinx-Gallery <https://sphinx-gallery.github.io> _